Why composable commerce won’t fix your scaling challenges

Retailers across the globe are embracing composable commerce for the agility and flexibility it offers. But successfully scaling and maintaining reliability with composable architecture is complex, expensive, and sometimes impossible. Discover five reasons composable won't fix your scaling challenges, why composable companies continue to crash under load, and how a virtual waiting room can protect your web app in ways scaling and autoscaling can’t.

Composable commerce has become the standard for how ecommerce enterprises build and approach their architecture.

With composable, retailers can more easily adapt to consumer trends, adopt new best-in-class technologies, and build a tech stack perfectly tailored to their needs.

Composable gives retailers the flexibility and agility needed to scale their businesses.

But scaling an ecommerce business is different to scaling its infrastructure.

That’s why, after the difficult process of migration, the main challenges of composable commerce come on or in preparation for retailers’ busiest days—Black Friday, a product drop, a brand collab, a surprise sale, etc.

When traffic surges suddenly, or bots launch an attack, the complexity of composable makes it difficult to scale uniformly, test and troubleshoot problems, fail gracefully, or quickly restore service.

Discover the five challenges to handling traffic spikes with composable architecture, hear concrete examples of why composable companies continue to crash under load, and learn how a virtual waiting room can protect your web app in ways scaling and autoscaling can’t.

Key composable commerce challenges at scale

In the shift towards composable, third-party services are increasingly becoming points of failure for companies across industries:

- 62% of enterprise CIOs and IT managers say multivendor environments cause more downtime than a single source

- 42% of IT experts say their organization has suffered an outage in the past three years that was caused by a problem with a third-party vendor

By increasing the number of critical services in your tech stack, you increase the risk that one critical part of your tech stack fails.

This is due to simple math. The availability SLA of a single vendor becomes diluted each time you add a new one that is critical to your customer journey:

- If you depend on one monolithic service provider with an availability of 99.9%, then that’s your availability

- If you depend on two SaaS vendors which each have an availability of 99.9%, your availability drops to 99.8% (99.9% x 99.9% = 99.8%)

- If you depend on ten SaaS vendors which each have an availability of 99.9%, then your availability drops to 99%

99% may still sound pretty good, but that 0.9% drop in availability corresponds to an additional three days and seven hours (roughly 79 hours) of downtime per year.

Most enterprises report that downtime costs exceed $300,000 per hour. So if you're anything like most enterprises, that 0.9% drop in availability can cost you up to $23.7 million annually.
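To make the arithmetic above easy to rerun with your own numbers, here is a minimal Python sketch. It assumes vendor failures are independent and that every vendor must be up for the customer journey to work. The function names are illustrative, and the 99.9% availability and $300,000 hourly cost are simply the figures quoted above.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def combined_availability(per_vendor: float, vendors: int) -> float:
    """Availability of a journey in which every vendor must be up at once.

    Assumes vendor failures are independent of each other.
    """
    return per_vendor ** vendors


def downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    cost_per_hour = 300_000  # hourly downtime cost quoted in the text
    baseline = downtime_hours(combined_availability(0.999, 1))
    for n in (1, 2, 10):
        avail = combined_availability(0.999, n)
        extra = downtime_hours(avail) - baseline
        print(f"{n:>2} vendors: {avail:.2%} available, "
              f"{extra:.1f} extra hours down, ${extra * cost_per_hour:,.0f}")
```

Swapping in each vendor's real SLA figure, rather than a flat 99.9%, gives a more realistic estimate for your own stack.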

RELATED: The Cost of Downtime: IT Outages, Brownouts & Your Bottom Line

And that’s just the increase in expected downtime for when vendors meet their SLAs—which they don’t always do.

The Uptime Institute reports that “the frequency of outages (and their duration) strongly suggests that the actual performance of many providers falls short of service level agreements (SLAs). Customers should never consider SLAs (or 99.9x% availability figures) as reliable predictors of future availability.”

This recommendation is affirmed by a survey of the top 2,000 companies globally, which found that the average top company doles out $16 million in SLA penalties annually.

Retailers need to be extremely careful taking on new vendors that can become single points of failure in the user journey. Each one may appear to be reliable. But a composable approach means relying on a lot of services, all of which are at risk of failing—especially when hit with extreme load.

RELATED: 87 Composable Commerce Statistics That Show The Future Is Composable

You’re only as scalable as your smallest bottleneck. When scaling a composable system for high traffic, you need to ensure each individual service can scale quickly and effectively—or it will become a point of failure.
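As a rough illustration of the bottleneck principle, the Python sketch below finds the service that limits the whole journey. The service names and per-minute capacities are hypothetical, and it makes the simplifying assumption that every visitor touches every service.

```python
def max_sustainable_rate(capacities: dict[str, int]) -> tuple[str, int]:
    """Return the limiting service and the rate the whole journey can sustain."""
    bottleneck = min(capacities, key=capacities.get)
    return bottleneck, capacities[bottleneck]


# Hypothetical per-minute capacities, for illustration only.
capacities = {
    "storefront": 10_000,
    "search": 4_000,
    "login": 2_500,
    "payment_gateway": 1_200,
}

service, rate = max_sustainable_rate(capacities)
print(f"Bottleneck: {service} at {rate} requests per minute")
```

In this example, scaling the storefront further would change nothing, because the payment gateway caps the journey at its own limit.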

Many of the companies we work with use Queue-it specifically because they have third-party bottlenecks that either can’t scale at all, or can scale, but for an exorbitant price.

Some of the most common third-party issues we see are with payment gateways, logins, inventory management systems, checkout, authentication and fraud services, and search functionality.

Third-party bottlenecks like these are typically:

- Expensive to scale: You may need to upgrade your ongoing subscription tiers (often billed monthly or annually) with one or several vendors simply to handle a temporary traffic spike.

- Impossible to scale: We’ve had dozens of customers come to us because they work with a third-party vendor that’s critical to their site, but which simply can’t scale beyond a set threshold.

- Tough to identify: Composable introduces more change and complexity to your architecture, which makes it more challenging to identify potential performance bottlenecks via testing. One Queue-it customer, for example, controlled the flow of traffic to all their key bottlenecks using the virtual waiting room and kept their systems online during surging demand. But then something unexpected happened: they hit their daily limit for email send-outs. They couldn’t send out confirmation emails because that service became a new bottleneck.

RELATED: 3 Autoscaling Challenges & How to Overcome Them with a Virtual Waiting Room

Plus, even if you find the bottleneck, notify the vendor, and upgrade your subscription, you still have no real guarantees.

One of our customers (before they started working with Queue-it) faced this challenge on their biggest day of the year. Before their sale, they upgraded their subscription with a key vendor (vendor X) and got an appropriate throughput agreement.

But on the day of the sale, vendor X crashed anyway. It turned out vendor X hadn’t told one of their downstream third-party vendors (vendor Y) there was going to be increased demand. So vendor Y crashed, which brought down vendor X, which brought down our customer’s main site.

The point is: the more distinct services that are critical to your user journey, the more time and money you must spend identifying and scaling each one for high-traffic events, and the more risk you introduce.

"Not all components of a technical stack can scale automatically, sometimes the tech part of some components cannot react as fast as the traffic is coming. We have campaigns that start at a precise hour … and in less than 10 seconds, you have all the traffic coming at the same time. Driving this kind of autoscaling is not trivial."

ALEXANDRE BRANQUART, CIO/CTO

To uncover your bottlenecks and discover issues that emerge under load, you need comprehensive load and performance testing. But composable can make testing more difficult, for three key reasons:

- Increased rate of change: If you want to maintain high levels of reliability, then the composable approach of “constant change” also means “constant testing”—which can become extremely expensive for DevOps and SRE teams.

- Increased complexity: With a more complex system, your performance tests must simulate a more complex user journey that draws on more services.

- Increased cost: To run performance tests on third parties, you typically need to pay them to set up the test environment and collect or create test data.

We’ve already seen how one company did thorough testing but dealt with a bottleneck in their email service provider. But they’re just one of many Queue-it customers who’ve faced issues with third parties after doing what they believed to be appropriate load testing.

RELATED: Everything You Need To Know About Load Testing

British ecommerce brand LeMieux, for example, load tested their site and set up a virtual waiting room to flow traffic to the site at exactly the rate it could handle.

But on the morning of their Black Friday sale, their site experienced significant slowdowns. They realized they’d not load tested their third-party search and filter features.

Because they had a virtual waiting room in place, they could decrease the flow of traffic to the site quickly and resolve the issue. But their story provides a valuable lesson for those running tests: test every component.

"We believed previous problems were caused by volume of people using the site. But it’s so important to understand how they interact with the site and therefore the amount of queries that go back to the servers."

JODIE BRATCHELL, ECOMMERCE & DIGITAL PLATFORMS ADMINISTRATOR, LEMIEUX

But even if you manage to run a load test that tests every component, there’s still the challenge of accurately simulating true user behavior.

If our work on thousands of high-traffic online events has taught us one thing, it’s that traffic doesn’t hit websites in the uniform patterns of a load test. To illustrate, here are a few examples of real-world traffic spikes from our customers:

- Rapha’s traffic increased 50x (from 18 to 900) in a single minute during a brand collaboration drop

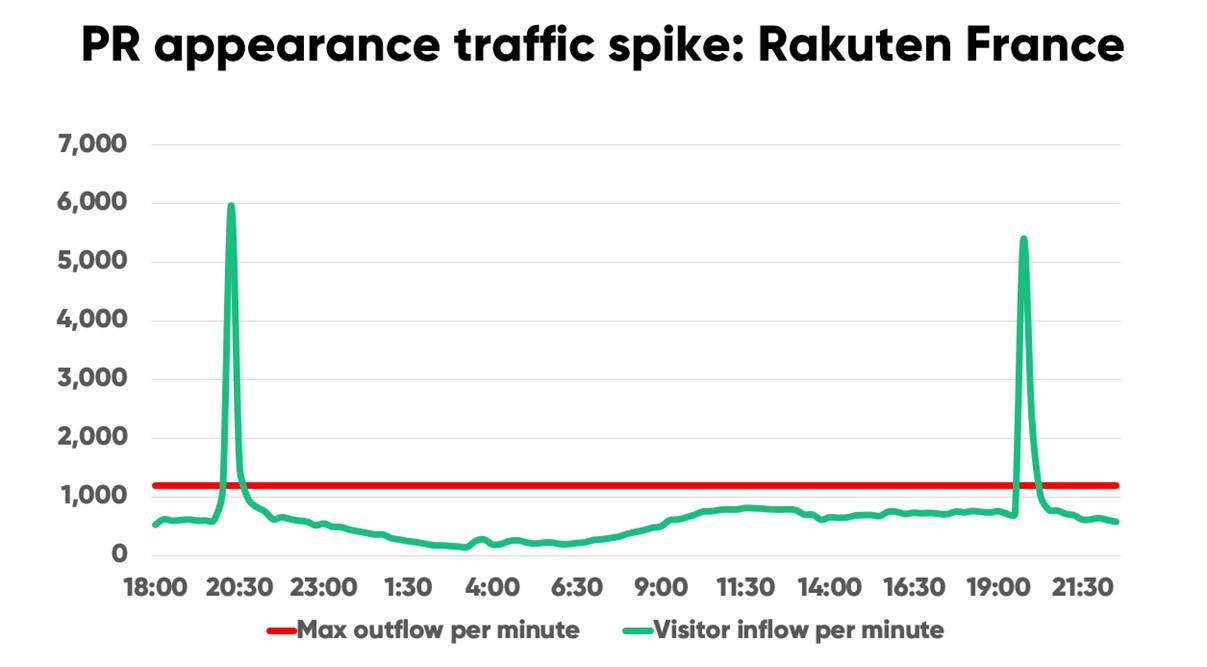

- Rakuten France’s traffic spiked 819% in two minutes when they appeared on the national news

- Peach Aviation’s traffic shot up 4x (from 1,000 to 4,000) in less than 10 minutes during an airline ticket sale

- When one of our customers ran a Super Bowl ad, their traffic spiked 23x in two minutes

RELATED: A TV spot spiked Rakuten France’s traffic 819% in 2 minutes. Here’s how it went.

You can’t accurately predict traffic spikes like these. It’s complex and expensive enough to scale your own servers and databases for a 23x traffic peak. But with composable, you need to ask this of potentially dozens of vendors.

In all likelihood, you’ll either overestimate traffic and overspend on scaling, or underestimate traffic and pay the price of a website crash.

“In our experience, the servers respond to load tests in a way that gives you a false sense of what’s possible. My hypothesis is that it’s because load testing is so programmatic and systematic. Whereas in real life there’s a huge randomness factor that can’t be simulated.”

ROSHAN ODHAVJI, CO-FOUNDER AND CEO

Composable commerce gives you little control over what happens when systems fail. This occurs because each vendor has different rules for what happens when it fails. Some return error pages, others spinning wheels, others never load.

The advantage of decoupled systems compared to monolithic ones is that a single failure doesn’t have to bring down your whole site or app. But the disadvantage is that each service has its own rules, and partial failures can create even more frustration and unfairness than total failures. As Puma’s Ecommerce Manager says:

“The reliability bit is currently the hardest thing about composable, because every vendor has their strengths, but they think in isolation.”

If your inventory management system gets overloaded, you can oversell products and be forced to cancel orders.

If your payment gateway fails, you’ll have customers that rush to your site, add items to their cart, but simply can’t buy them.

And if your login or loyalty program service fails, you can end up in a scenario where your members get a worse experience than those who use guest checkout.

As we’ve covered, site errors are extremely common when traffic spikes suddenly. When you’re building your composable system, it’s important to consider what these errors look like and try to mitigate their impact on the user experience.

RELATED: Overselling: Why Ecommerce Sites Oversell & How To Prevent It

As ecommerce evolves, so too do the attack vectors of those who seek to exploit it. By decoupling your architecture and dealing with more vendors, your potential attack surface consists of more, smaller units, with more access points via APIs.

APIs by nature expose functionality to the outside world. They’re designed for programmatic access to enable automated interaction between platforms, which makes them prime targets for bots.

API abuse has skyrocketed 177% in the past year. Bad actors can target APIs, for example, to bypass the typical user flow and make a direct API call to complete a transaction. Or they could abuse APIs to test stolen credit card information or login details in bulk—44% of account takeover attacks in 2023 targeted APIs specifically.

When bad actors abuse APIs, they often do so in huge volumes, creating hundreds or thousands of requests per minute. These surges in activity are tough to predict or prepare for, meaning they often overload databases or services like login.

One survey found organizations manage an average of 300 APIs—and 70% of developers expect API usage to continue to grow. A Cloudflare report found that almost a third of these APIs are “shadow APIs”—meaning APIs that haven’t been managed or secured by the organization using them.

APIs are critical to modern ecommerce architecture. But the sheer scale of API endpoints not only gives bots more access points, it also makes it more difficult to effectively secure against all vulnerabilities. You need to be conscious of the security and performance implications of each API you develop or use.

RELATED: Fortify Your Bots & Abuse Defenses With Robust Tools From Queue-it

The biggest challenge of composable commerce, for most companies, is transitioning to composable tech and thinking. But after this migration, the main challenges of the composable approach typically emerge on or in preparation for retailers’ biggest days. Composable can make it more difficult and expensive to scale quickly, test properly, fail gracefully, and ensure security.

With composable, the Silicon Valley mantra of “Move fast and break things” has hit the ecommerce world. Most companies now believe that to stay agile and competitive, they need to accept downtime risk:

- 78% of tech respondents at the top 2,000 global companies say they accept downtime risk in order to adopt new technologies

- 40% of CMOs say they value speed-to-market over security and reliability

But it is possible to move fast without breaking things.

That’s why major retailers like The North Face, SNIPES, and Zalando use a virtual waiting room, so they can control their online traffic and run large-scale online events without the fear of failure.

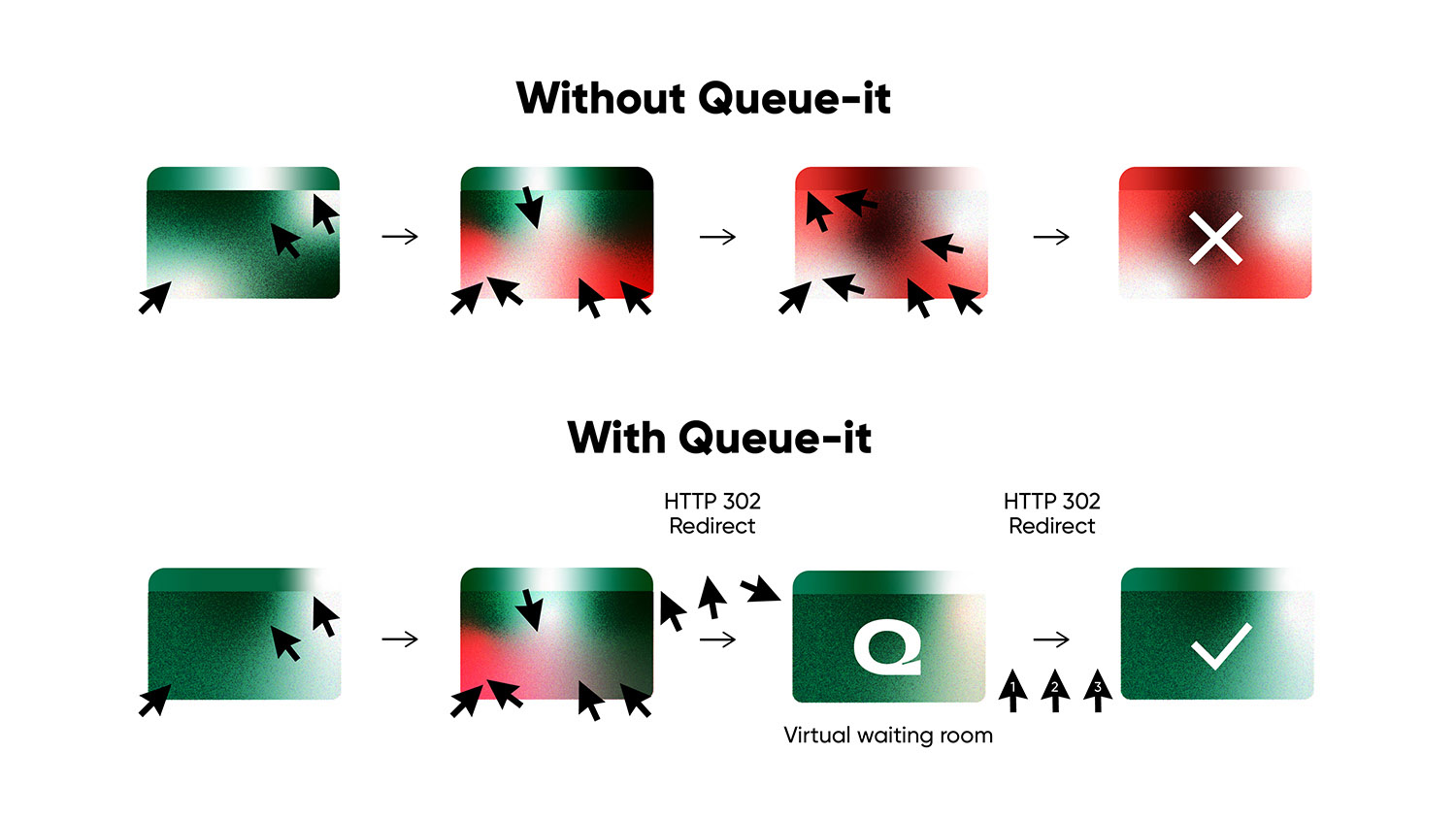

A virtual waiting room is a cloud-native SaaS solution that prevents website crashes and ensures fairness in high-demand situations like Black Friday sales, ticket onsales, and product drops and launches.

Virtual waiting rooms work by automatically redirecting visitors to a waiting room when they perform an action the business wants to manage—such as visiting a landing page or proceeding to checkout—or when traffic spikes to levels that threaten site performance.

You can choose the exact rate at which visitors flow back to the target page or website, giving you complete control over traffic flow, scaling costs, the user experience, and API vulnerabilities.

RELATED: Everything You Need To Know About Virtual Waiting Rooms

Virtual waiting rooms let your existing web app act like one that’s purpose built for high-traffic events and sudden surges in traffic.

With a virtual waiting room, you can control the rate at which visitors access your entire site, a landing page, or a key bottleneck like login or payment gateway. You can flatten the curve of sudden traffic peaks to ensure activity never exceeds what your site is built to handle.

When Rakuten France appeared on national news, for example, their site traffic spiked 819% in two minutes.

Their traffic threshold—determined by load testing—was 1,200 per minute. Their PR appearances saw traffic 5x that number, hitting 6,000 new users per minute.

Because Rakuten had a virtual waiting room, these sudden spikes didn’t crash their site. Instead, users were automatically placed in the waiting room then flowed to the site in fair first-in, first-out order at the 1,200 per minute rate configured by the DevOps team.
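The behavior described above can be sketched as a small Python simulation. This is an illustrative model, not Queue-it's implementation: the function name is made up, time is stepped in whole minutes, and the three-minute length of the spike is an assumption. The 6,000 arrivals and 1,200 releases per minute are the figures from the Rakuten example.

```python
from collections import deque


def simulate_waiting_room(arrivals_per_minute, release_rate):
    """Simulate a first-in, first-out waiting room, one step per minute.

    arrivals_per_minute: visitor counts arriving in each minute.
    release_rate: maximum visitors let through to the site per minute.
    Returns a list of (released, still_waiting) tuples, one per minute.
    """
    queue = deque()  # visitor IDs in arrival order
    next_id = 0
    history = []
    for arrivals in arrivals_per_minute:
        for _ in range(arrivals):
            queue.append(next_id)
            next_id += 1
        released = min(release_rate, len(queue))
        for _ in range(released):
            queue.popleft()  # the longest-waiting visitor goes first
        history.append((released, len(queue)))
    return history


# Assumed scenario: a three-minute spike, then quiet minutes while the queue drains.
arrivals = [6_000, 6_000, 6_000] + [0] * 12
history = simulate_waiting_room(arrivals, release_rate=1_200)
for minute, (released, waiting) in enumerate(history, start=1):
    print(f"minute {minute:>2}: released {released:>5}, waiting {waiting:>6}")
```

However sharp the spike, the site never sees more than the configured 1,200 visitors in any minute. The excess demand is converted into waiting time instead of load.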

“Queue-it’s virtual waiting room reacts instantaneously to our peaks before they impact the site experience. It lets us avoid creating a bunch of machines just to handle a 3-minute traffic peak, which saves us time and money.”

THIBAUD SIMOND, INFRASTRUCTURE MANAGER

By controlling the flow of traffic, you can take control of the otherwise unpredictable costs of scaling and operate at maximum capacity without the risk of failure.

Virtual waiting rooms let you avoid the expense and risk of scaling both your infrastructure and that of the third parties you rely on. And if you have a bottleneck or a third party that simply can’t scale, you can configure traffic to never exceed the levels it can handle.

In a recent survey, Queue-it customers reported an average saving of 38% on server scaling costs and 33% on database scaling costs.

As a bolt-on, easy-to-use solution, setting up a virtual waiting room is far easier than preparing your website for large, unpredictable traffic spikes. Once you integrate Queue-it into your tech stack, you can set up a new waiting room within minutes.

Our customers report a 48% decrease in staff needed on-call for events, which enables increased productivity and frees up time to focus on delivering broader business value.

RELATED: Queue-it Customer Survey: Real Virtual Waiting Room Results From Real Customers

“Autoscaling doesn’t always react fast enough to ensure we stay online. Plus, it’s very expensive to autoscale every time there’s high traffic. If we did this for every traffic spike, it would cost more than double what we pay for Queue-it. So Queue-it was just the better approach, both in terms of reliability and cost.”

MIJAIL PAZ, HEAD OF TECHNOLOGY

With a virtual waiting room, you can ensure traffic hits your web app in the same uniform pattern you’ve simulated in your load tests. But as we’ve covered, bottlenecks can be tough to identify, and third-party vendors are never 100% reliable. So what happens when there’s an unexpected problem or partial failure?

With a virtual waiting room, you can control traffic in real-time. This means you can slow or pause the flow of traffic to your site the moment a problem occurs. It gives you the time and freedom to fix problems and adjust traffic flow on-the-fly.
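To show what slowing or pausing the flow means in practice, here is a minimal Python sketch. The `FlowController` class, its method names, and the scenario numbers are all hypothetical and do not represent Queue-it's API. It only models an operator changing the outflow rate while visitors wait.

```python
class FlowController:
    """Tracks the outflow rate an operator has set for a waiting room."""

    def __init__(self, rate_per_minute: int):
        self.rate_per_minute = rate_per_minute
        self.paused = False

    def set_rate(self, rate_per_minute: int) -> None:
        if rate_per_minute < 0:
            raise ValueError("rate must be zero or greater")
        self.rate_per_minute = rate_per_minute

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def release(self, waiting: int) -> int:
        """Return how many waiting visitors to let through this minute."""
        if self.paused:
            return 0
        return min(self.rate_per_minute, waiting)


controller = FlowController(rate_per_minute=1_200)
waiting = 5_000
released_log = []

for minute in range(1, 6):
    if minute == 2:
        controller.set_rate(300)    # a third-party service starts to slow down
    elif minute == 3:
        controller.pause()          # stop outflow while the issue is fixed
    elif minute == 4:
        controller.resume()
        controller.set_rate(1_200)  # back to the load-tested rate
    released = controller.release(waiting)
    waiting -= released
    released_log.append(released)

print(released_log, waiting)
```

Visitors who are held back stay in the queue rather than hitting an error page, so no one loses their place while the problem is resolved.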

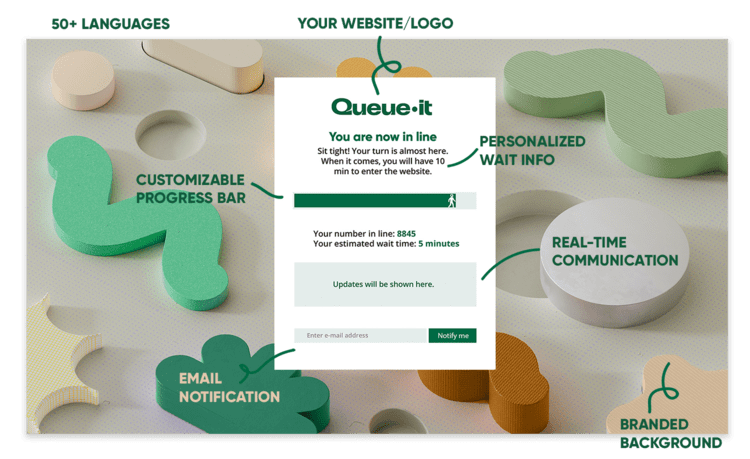

Instead of overselling products, error pages, and partial failures, all users get the same consistent branded experience with detailed wait information. Plus, you can send real-time messages to all visitors in the waiting room, keeping them informed.

“Queue-it lets us push our servers right to the edge without going over it. It lets us adjust the traffic outflow on the fly to maximize throughput without compromising reliability, which gives us peace of mind during large onsales.”

ROSHAN ODHAVJI, CO-FOUNDER AND CEO

After years of work with some of the world’s biggest brands on their busiest days, our team realized that to run successful large-scale events, companies need to control not only surges in genuine customer activity, but also in bad bot activity.

That’s why alongside protecting your site from surging demand, Queue-it offers a suite of multi-layered bots and abuse defenses that can stop bots before they even access your site.

Just like an airport security checkpoint screens passengers before they board their flights, a virtual waiting room acts as a checkpoint between your web page and the purchase path.

Controlling the flow of visitors lets you defeat bots' speed and volume advantage and allows you to run checks and block bots before they even get access to the sale.

In the context of composable architecture and API attacks, Queue-it’s enqueue token feature is perhaps the most effective at ensuring bad actors don’t abuse API endpoints for a competitive advantage. This is because you can run a check at any point in the user journey that verifies information such as:

- Whether the user has been through the queue

- Whether they have a valid visitor identification key (such as an account ID, email address, or promo code)

- Whether the email they use at checkout is the same they used to enter the waiting room (for invite-only waiting rooms)

One common botting strategy is to “park” on the website ahead of a large event such as a sneaker drop. The bad actor might add a pair of socks to their cart, sit on that page till the sneaker drop goes live, then use an API call to swap the socks for the newly released sneakers and quickly check out.

When a visitor such as this tries to complete their transaction, you can call the Queue-it API to verify they have a valid enqueue token, which means they’ve passed through the queue or are checking out with the same details they used to enter the waiting room. If they haven’t, Queue-it can automatically send them to the back of the queue.
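The general idea of checking a token at checkout can be sketched in Python with a signed token. This is a generic illustration and not Queue-it's actual enqueue token format or API: the secret, the function names, and the token layout are all assumptions made for the example.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical shared secret


def _sign(visitor_key: str) -> str:
    return hmac.new(SECRET_KEY, visitor_key.encode(), hashlib.sha256).hexdigest()


def issue_token(visitor_key: str) -> str:
    """Create a signed token when a visitor passes through the waiting room."""
    return f"{visitor_key}.{_sign(visitor_key)}"


def verify_token(token: str, checkout_key: str) -> bool:
    """Check the token is untampered and matches the identity used at checkout."""
    visitor_key, _, signature = token.rpartition(".")
    untampered = hmac.compare_digest(signature.encode(), _sign(visitor_key).encode())
    return untampered and visitor_key == checkout_key


token = issue_token("shopper@example.com")
print(verify_token(token, "shopper@example.com"))  # same email as in the queue
print(verify_token(token, "bot@example.com"))      # different email at checkout
print(verify_token("shopper@example.com.forged", "shopper@example.com"))
```

A request that reaches checkout through a direct API call, without ever passing through the waiting room, has no valid token to present. It can then be rejected or sent to the back of the queue.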

You can also check enqueue tokens in your post-sale audits to verify transactions are associated with a visitor identification key before you ship their order.

If you’re concerned about vulnerabilities or abuse during peak traffic events, you can also speak to our technical teams, who’ve worked with hundreds of retailers on identifying weak spots and securing their websites against malicious activity.

RELATED: Stop Bad Bots With Queue-it's Suite of Bots & Abuse Mitigation Tools

All of this isn’t to say composable is bad. It’s been embraced by retailers across the globe for good reason. Composable enables flexibility, agility, and a better customer experience.

But composable is far from the “cure-all” it’s sometimes painted as. In many cases, it increases downtime and security risks, the costs of scaling, and development resources—especially during or in preparation for high-traffic events.

A virtual waiting room gives you the control you need to ensure composable systems don’t fall prey to partial failures and runaway scaling costs. It’s a bolt-on solution that empowers you to think big and build fast without breaking things.

Queue-it is the market-leading developer of virtual waiting room services, providing support to some of the world’s biggest retailers, from The North Face, to Zalando, to SNIPES.

Our virtual waiting room and the customers that use it are our core focus. We provide 24/7/365 support from across three global offices and have a 9.7/10 Quality of Support rating on G2 from over 100 reviews. Over the past 10 years, our 1,000+ customers have had over 75 billion visitors pass through our waiting rooms.

Book a demo today to discover how you can get peace of mind and control during high-demand events.